Bans and conditions are coming down on artificial intelligence in the EU on Feb. 2, in line with the Artificial Intelligence Act (AI Act), which was signed in June 2024 and entered into force in August 2024. The most concise official guide to the new law is the EU’s briefing.

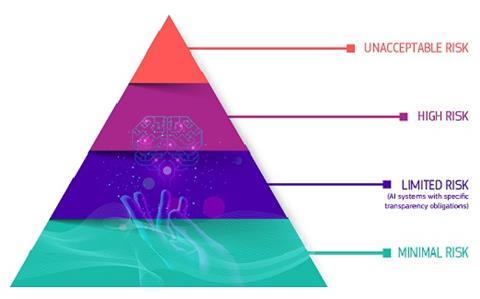

The Act sorts AI systems into four risk categories:

- AI with unacceptable risk – banned

- AI with high risk (for health, safety or rights) – subject to requirements and obligations

- AI with limited risk – subject to informational and transparency requirements

- AI with minimal risk – unrestricted

A summary from the EU Parliament reads in part as follows: “The new rules ban certain AI applications that threaten citizens’ rights, including biometric categorization systems based on sensitive characteristics and untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases. Emotion recognition in the workplace and schools, social scoring, predictive policing (when it is based solely on profiling a person or assessing their characteristics), and AI that manipulates human behaviour or exploits people’s vulnerabilities will also be forbidden.”

There are, however, exemptions: “The use of biometric identification systems (RBI) by law enforcement is prohibited in principle, except in exhaustively listed and narrowly defined situations. ‘Real-time’ RBI can only be deployed if strict safeguards are met, e.g. its use is limited in time and geographic scope and subject to specific prior judicial or administrative authorization. Such uses may include, for example, a targeted search of a missing person or preventing a terrorist attack. Using such systems post-facto (‘post-remote RBI’) is considered a high-risk use case, requiring judicial authorization being linked to a criminal offence.”

The EU is also laying down a “code of practice” for general-purpose AI (GPAI) models, with more stringent provisions for models with “high-impact capabilities.” The timeline for this extends to May 2025.

Enforcement and more development

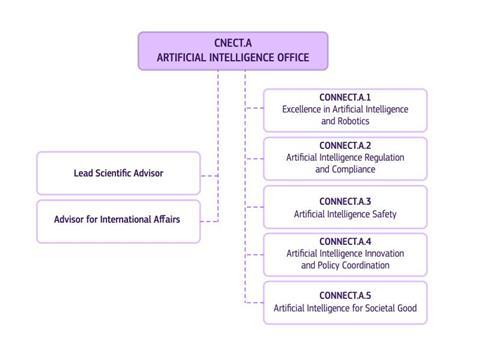

The European Commission (EC) established within its purview a European AI Office, with five units and two advisors.

Back in 2018 the EC established the European AI Alliance, which now has about 4,000 members and 6,000 “stakeholders” and has been holding annual European AI Alliance Assemblies.

Inspiration from the OECD

The law’s definition of AI draws from that of the OECD: “An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.” With a view toward the eventual law, the OECD also revised its principles on AI last year.

In 2023 the OECD also set up an AI Incidents Monitor (AIM), which uses “machine learning models” to identify and classify “incidents and hazards” in the reports of “reputable international media” – i.e., AI scanning the mainstream press for news of AI.

To use the mainstream press is already to make a selection, but the material the OECD scans in fact consists mostly of headlines and abstracts selected and provided by Event Registry, which itself uses AI.

Event Registry was founded in 2017 – with Google funds – by the Jožef Stefan Institute (JSI), the biggest research institute in Slovenia, with such clients as Bloomberg, IBM, Nasdaq, Harvard, Stanford and the American geopolitical-intelligence publisher Stratfor. JSI is well connected in Europe, forming part of CERN, the European Research Council and many other bodies.

The lawmakers

Two committees within the EU Parliament drafted the proposals: Internal Market and Consumer Protection (IMCO) and Civil Liberties, Justice and Home Affairs (LIBE).

IMCO’s chief members are:

- Anna Cavazzini, chairman (Greens/EFA, Germany)

- Christian Doleschal, vice-chairman (European People’s Party, Germany)

- Nikola Minchev, vice-chairman (Renew, Bulgaria)

- Maria Grapini, vice-chairman (Socialists & Democrats, Romania)

- Kamila Gasiuk-Pihowicz, vice-chairman (European People’s Party, Poland)

LIBE’s chief members are:

- Javier Zarzalejos, chairman (Partido Popular, Spain)

- Marina Kaljurand, vice-chairman (Socialists & Democrats, Estonia)

- Charlie Weimers, vice-chairman (European Conservatives and Reformists, Sweden)

- Alessandro Zan, vice-chairman (Socialists & Democrats, Italy)

- Estrella Galán, vice-chairman (Left-wing consortium Sumar, Spain)

Some of these committee members were elected to Parliament as recently as 2024 and so cannot have taken part in all of the negotiations.

The rapporteurs representing the European Parliament in those negotiations were Brando Benifei (Socialists & Democrats, IMCO) and Dragos Tudorache (Renew, LIBE). Michael McNamara (Renew, Ireland, LIBE) served as co-chairman of the joint IMCO-LIBE Working Group, which focuses on post-enactment oversight.

Stray details

According to the Norwegian law firm Wikborg Rein, France, Germany and Italy opposed the regulation of foundation models – large, general-purpose AI models (GPT, Llama, Claude, Grok et al.) – at the trilogue negotiations of 2023, but they appear to have capitulated since.

Valérie Hayer, President of Renew Europe, the political group to which several aforementioned committee members belong, set off on a four-day visit to Greenland and Iceland on Jan. 28, “after the latest provocations made by US President Donald Trump regarding the Arctic island, a self-governing territory of EU-Member State Denmark” (see our first article on Trump’s recent executive orders).

Some relevant documents

Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (2023, Biden admin. executive order)

Impact Assessment of the Regulation on Artificial Intelligence (2021)

EU Charter of Fundamental Rights (2000)

White Paper on Artificial Intelligence (2020)

EU Guidelines for Trustworthy AI (2019)

Civil Law Rules on Robotics (2017)